ManyLLM

Run many local models. In one simple workspace.

Listed in categories:

Artificial Intelligence, Developer Tools, GitHub

Description

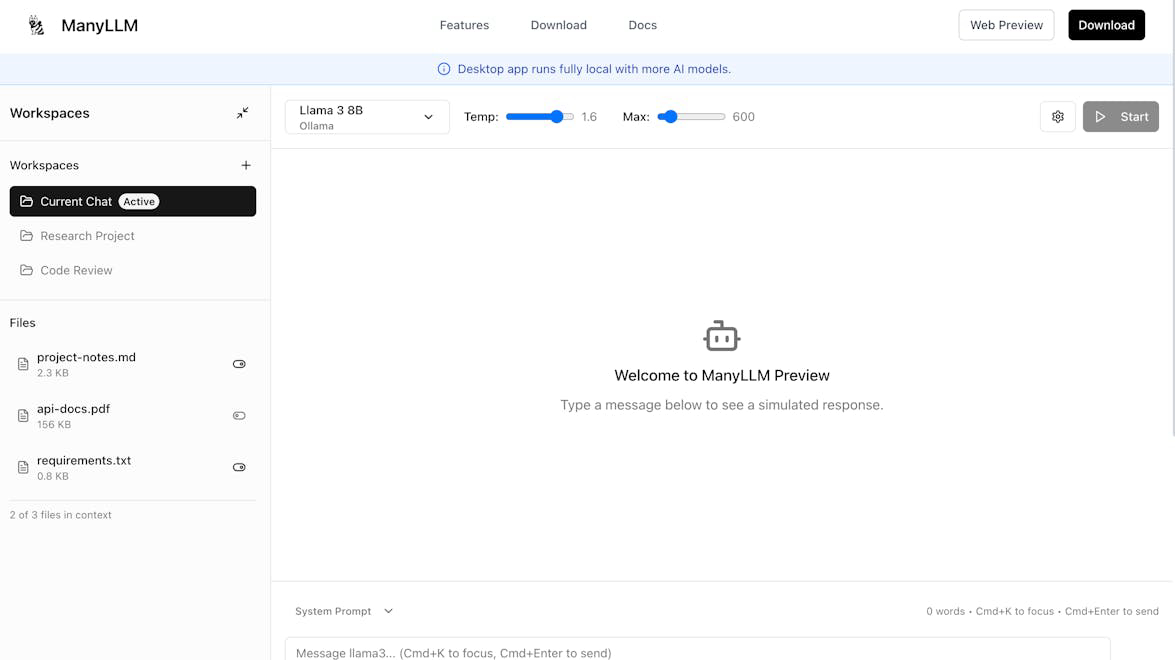

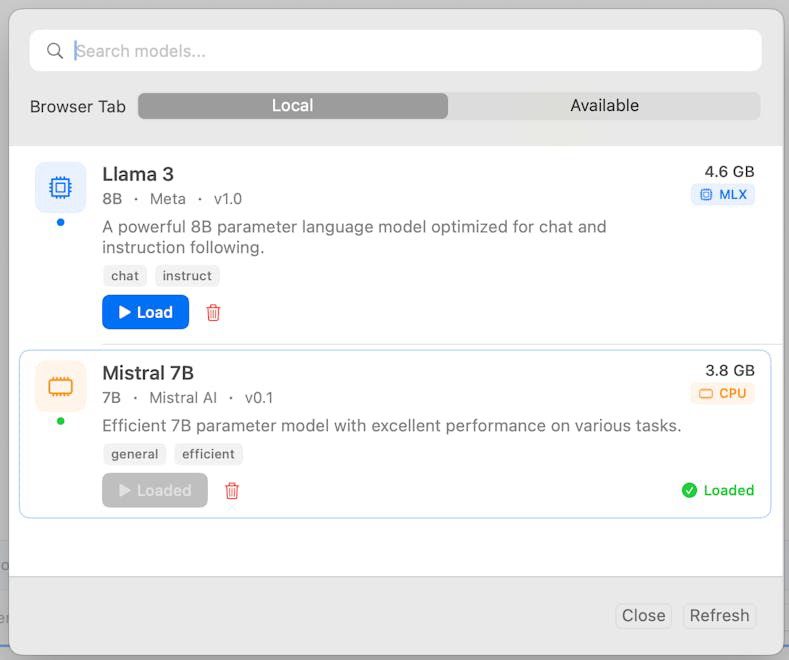

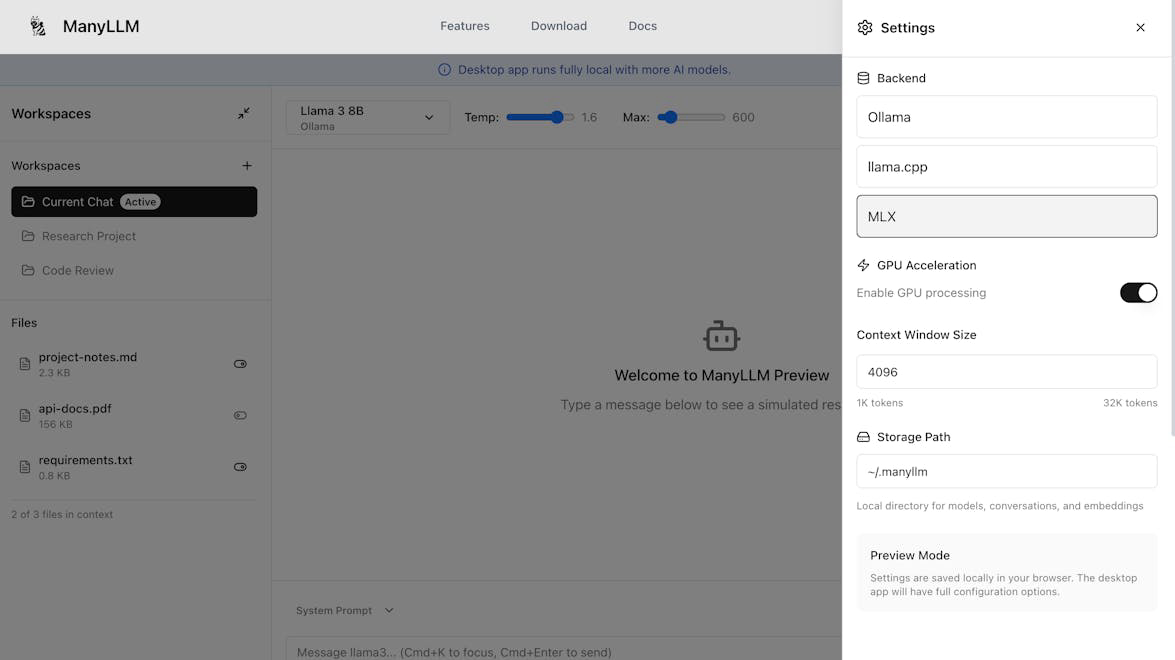

ManyLLM runs multiple local AI models in a single workspace, with an emphasis on user privacy and ease of use. It provides a unified chat interface and supports local retrieval-augmented generation (RAG), which suits developers, researchers, and privacy-conscious teams.

How to use ManyLLM?

To use ManyLLM, pick a model from the available local options, start chatting through the unified interface, and drag and drop files into the workspace to add them as context.

Core features of ManyLLM:

1️⃣ Run many local models

2️⃣ Unified chat interface

3️⃣ Local-first privacy

4️⃣ OpenAI-compatible API

5️⃣ Zero cloud by default
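
Because the feature list mentions an OpenAI-compatible API, a client would typically talk to it with the same request shape the OpenAI chat completions endpoint uses. The following is a minimal sketch under stated assumptions, not ManyLLM's documented interface: the base URL and port (`http://localhost:8000/v1`) and the helper names `build_chat_request`, `extract_reply`, and `chat` are hypothetical, and the model name must be whatever your local installation actually exposes.

```python
import json
import urllib.request

# Assumption: placeholder address for the local server. The real host,
# port, and path prefix depend on how ManyLLM is configured.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(model, user_message, base_url=BASE_URL):
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,  # hypothetical: use a model name your server lists
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from a chat-completions style response."""
    return response_body["choices"][0]["message"]["content"]


def chat(model, user_message, base_url=BASE_URL):
    """Send one message to the local server and return the reply text."""
    request = build_chat_request(model, user_message, base_url)
    with urllib.request.urlopen(request) as response:
        return extract_reply(json.load(response))
```

With a local server running, calling `chat("your-local-model", "Hello")` would return the model's reply as a string. Since the request goes to `localhost`, the prompt stays on the machine, which is consistent with the local-first privacy claim.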

Why use ManyLLM?

| # | Use case | Status |
|---|---|---|
| 1 | Developers building AI applications | ✅ |
| 2 | Researchers experimenting with local models | ✅ |
| 3 | Privacy-conscious teams managing sensitive data | ✅ |

Who developed ManyLLM?

ManyLLM is developed by a community-focused team dedicated to providing local-first AI tools that prioritize user privacy and flexibility.