MiniCPM 4.0

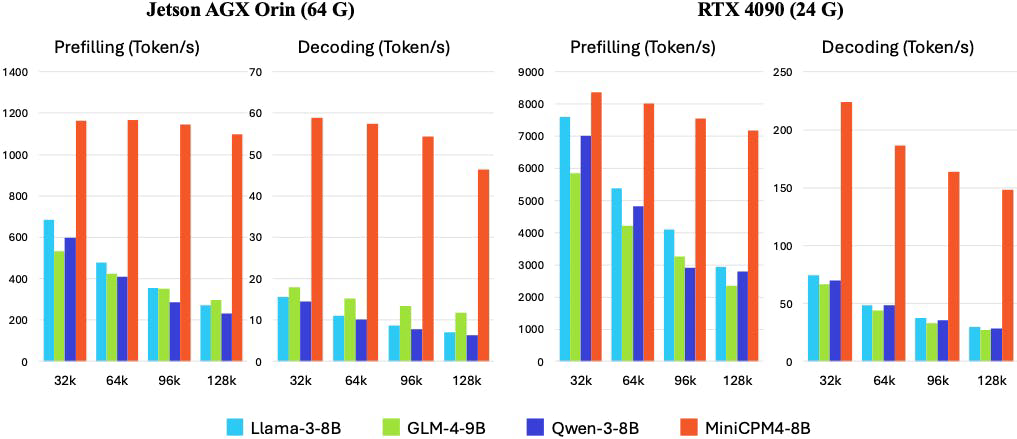

Ultra-efficient on-device AI, now even faster

Listed in categories:

Artificial Intelligence, Open Source, GitHub

Description

MiniCPM is an ultra-efficient language model designed to run on end devices, with substantial speedups in text generation. It uses efficiency-focused training strategies to keep the model small while preserving performance. This makes it well suited to a wide range of AI and natural language processing applications.

How to use MiniCPM 4.0?

To use MiniCPM, clone the repository from GitHub, install the required dependencies, and follow the setup instructions to integrate it into your application. You can then use the provided APIs to generate text and handle natural language tasks.
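The following is a minimal sketch of the "provided APIs" step. It assumes the weights are published on Hugging Face under the ID `openbmb/MiniCPM4-8B`. It also assumes they load through the `transformers` library with `trust_remote_code=True`. Check both assumptions against the official repository. The helper names `build_messages` and `generate` are hypothetical.

```python
# Minimal sketch: run a MiniCPM 4.0 checkpoint through Hugging Face transformers.
# ASSUMPTIONS: the model ID below, and that the checkpoint ships a chat template
# and needs trust_remote_code=True. Verify against the official repository.
DEFAULT_MODEL_ID = "openbmb/MiniCPM4-8B"  # assumed Hugging Face model ID


def build_messages(user_prompt, system_prompt=None):
    """Build a chat-format message list for tokenizer.apply_chat_template."""
    messages = []
    if system_prompt is not None:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def generate(user_prompt, model_id=DEFAULT_MODEL_ID, max_new_tokens=256):
    """Generate a reply. Heavy imports are deferred until the model is actually needed."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16 if device == "cuda" else torch.float32,
        trust_remote_code=True,
    ).to(device)

    # Render the conversation with the model's chat template, ending on the assistant turn.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

The first call to `generate("Summarize this paragraph: ...")` downloads the weights, and `torch` and `transformers` must be installed for it to work. The imports sit inside `generate`, so you can reuse the message-building helper without either library.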

Core features of MiniCPM 4.0:

1️⃣

Ultra-efficient language model for end devices

2️⃣

Achieves 3x generation speedup on reasoning tasks

3️⃣

Supports multiple AI frameworks

4️⃣

Optimized for low-resource environments

5️⃣

Easy integration with existing applications
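The "low-resource" and "3x speedup" items both reduce to simple arithmetic. The sketch below gives a rough estimate, not a benchmark. It computes weight memory as parameters × bits per weight ÷ 8 bytes and expresses speedup as a ratio of decoding throughputs. The 8-billion-parameter figure is an illustrative assumption, and the estimate ignores KV-cache and activation memory. For that size, 16-bit weights need about 16 GB, while 4-bit quantized weights need about 4 GB.

```python
# Back-of-the-envelope arithmetic for two items in the feature list.
# ASSUMPTION: the 8e9 parameter count is illustrative; substitute the size of the
# checkpoint you deploy. Only weights are counted (no KV cache or activations).

def weight_memory_gb(num_params, bits_per_weight):
    """Memory for model weights in decimal gigabytes: params * bits / 8 bytes."""
    return num_params * bits_per_weight / 8 / 1e9


def speedup(baseline_tokens_per_s, new_tokens_per_s):
    """Generation speedup as the ratio of new to baseline decoding throughput."""
    if baseline_tokens_per_s <= 0:
        raise ValueError("baseline throughput must be positive")
    return new_tokens_per_s / baseline_tokens_per_s


num_params = 8e9  # hypothetical 8B-parameter model
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(num_params, bits):.1f} GB")
```

Under this definition, a "3x generation speedup" means `speedup(baseline, new) == 3.0`. For example, decoding goes from 10 to 30 tokens per second on the same hardware.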

What can MiniCPM 4.0 be used for?

| # | Use case | Status |
|---|---|---|
| 1 | Natural language processing tasks | ✅ |
| 2 | AI-driven chatbots and virtual assistants | ✅ |
| 3 | Content generation for various applications | ✅ |

Who developed MiniCPM 4.0?

MiniCPM is developed by OpenBMB, a team working to advance AI technology and make capable language models accessible across a wide range of applications. The team focuses on efficient models that run on standard hardware, which lowers the barrier to adopting AI tools.